NVIDIA used CES 2026 to clarify a shift that has been building across several product cycles: the company is no longer presenting AI compute as a collection of individual accelerators, but as a rack-scale system designed and developed as a single unit. The clearest expression of that strategy is Vera Rubin NVL72, a rack-level AI system that brings together new CPUs, GPUs, interconnects, networking, and infrastructure processors into what NVIDIA describes as an integrated “AI Supercomputer.”

Rather than framing Rubin as a conventional chip launch, NVIDIA positioned it as a platform built to address the operational realities of modern AI workloads, where performance is increasingly shaped by power availability, data movement, system efficiency, and security boundaries, not raw compute alone.

Above: A photo of NVIDIA's Vera Rubin Architecture on a Video Display at CES 2026 in Las Vegas. Photo by David Aughinbaugh II for CircuitRoute.

From Components to Systems

At the platform level, Rubin is defined by six major silicon elements designed together rather than independently: the Vera CPU, Rubin GPU, NVLink 6 switch fabric, ConnectX-9 SuperNICs, BlueField-4 data processing units (DPUs), and NVIDIA’s latest Spectrum-class Ethernet and Quantum-class InfiniBand networking. The intent is to reduce friction between compute, networking, and infrastructure control, allowing the rack itself to function as the primary unit of scale.

Vera Rubin NVL72 is the first concrete system NVIDIA has presented under that model. The rack integrates 72 Rubin GPUs and 36 Vera CPUs, connected internally via NVLink 6 and externally through high-bandwidth Ethernet and InfiniBand fabrics. NVIDIA positions NVL72 as an evolution of its prior MGX-based rack designs, emphasizing deployment continuity while increasing performance density and system-level capability.

This emphasis on rack-scale design reflects a broader reality facing AI operators. As model sizes grow and inference becomes more interactive and latency-sensitive, performance gains increasingly depend on how efficiently systems move data across GPUs, nodes, and racks rather than on improvements to individual processors.

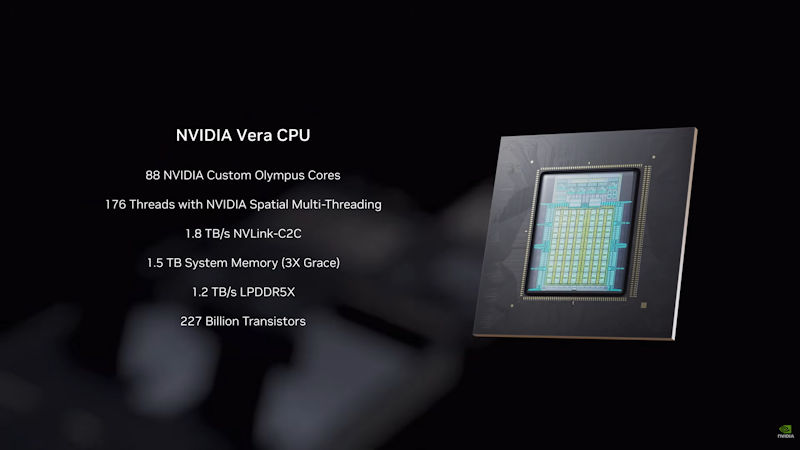

Above: An infographic containing information and specifications on NVIDIA's Vera CPU.

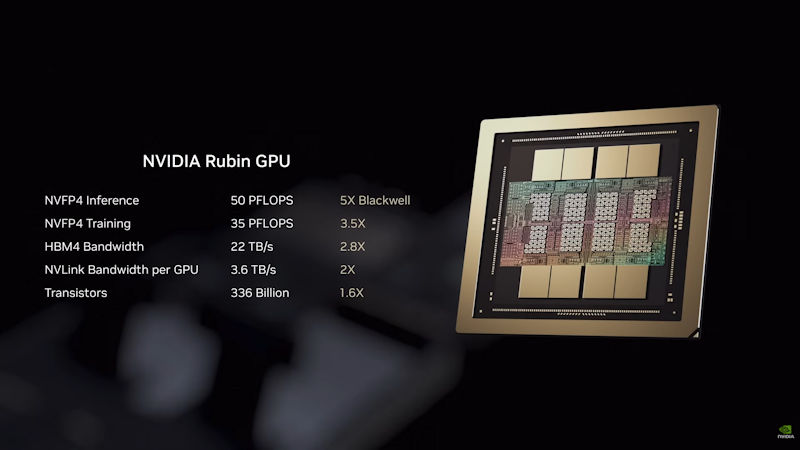

Above: An infographic containing information and specifications on NVIDIA's Rubin GPU.

Why NVIDIA Built Rubin

During his CES presentation, NVIDIA President and CEO Jensen Huang framed Rubin as a response to a structural challenge facing the industry. “Vera Rubin is designed to address this fundamental challenge that we have,” Huang said. “The amount of computation necessary for AI is skyrocketing.”

That framing presents Rubin as a system-level platform rather than a single generational product update. Across its CES materials, NVIDIA emphasizes efficiency metrics such as inference cost, power density, and system utilization alongside performance, rather than focusing solely on peak theoretical throughput.

NVL72 and Scale-Up Connectivity

A central element of the Rubin platform is NVLink 6, which NVIDIA states provides 3.6 terabytes per second of bandwidth per GPU, with an aggregate of roughly 260 terabytes per second across the 72-GPU domain inside NVL72. NVIDIA describes the purpose of this fabric as enabling large groups of GPUs to operate within a shared performance domain, reducing overhead associated with synchronizing work across slower interconnects.
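The aggregate figure follows directly from the per-GPU number. As a quick sanity check, the short sketch below multiplies the two values NVIDIA has stated (3.6 TB/s per GPU, 72 GPUs per rack). The constant and function names are illustrative and not taken from any NVIDIA tool, and the calculation assumes the aggregate is a simple sum of per-GPU bandwidth.

```python
# Figures as stated by NVIDIA and quoted in this article.
# All names here are illustrative, not part of any NVIDIA API.
GPUS_PER_RACK = 72
NVLINK6_TBPS_PER_GPU = 3.6  # terabytes per second, per GPU


def aggregate_nvlink_bandwidth_tbps(gpus: int, per_gpu_tbps: float) -> float:
    """Return the summed per-GPU NVLink bandwidth across the domain."""
    return gpus * per_gpu_tbps


aggregate = aggregate_nvlink_bandwidth_tbps(GPUS_PER_RACK, NVLINK6_TBPS_PER_GPU)
print(f"Aggregate NVLink 6 bandwidth: {aggregate:.1f} TB/s")
```

The product comes to 259.2 TB/s, which is consistent with the "roughly 260" figure NVIDIA cites for the 72-GPU domain.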

For scale-out workloads, NVL72 pairs that internal fabric with NVIDIA’s latest InfiniBand and Ethernet platforms, including Quantum-X800 and Spectrum-X. NVIDIA states that this combination is intended to support multi-rack clusters where training and inference workloads span hundreds or thousands of accelerators, without introducing bottlenecks at the rack boundary.

Above: A photo of NVIDIA's AI Supercomputer NVL72 Rack on display at CES 2026 in Las Vegas. Photo by David Aughinbaugh II for CircuitRoute.

NVIDIA has stated that Rubin is designed to support mixture-of-experts (MoE) and agentic AI workloads, which often involve more complex communication patterns between processing elements. In that context, NVIDIA places particular emphasis on interconnect bandwidth and latency as system-level considerations.

Power, Cooling, and Operational Constraints

NVIDIA has stated that Vera Rubin delivers roughly twice the compute power of Grace Blackwell while maintaining similar airflow and water temperature requirements. According to the company, the system is designed to operate without chilled-water infrastructure, allowing higher-density configurations to be deployed without changes to existing data-center cooling systems.

This approach addresses a common constraint faced by data-center operators as system densities increase. By avoiding the need for lower-temperature chilled water, Rubin-based systems may reduce the need for facility-level cooling modifications when deploying higher-density AI infrastructure.

NVIDIA has noted that these efficiency characteristics are based on internal workload scenarios and that real-world results will depend on model architecture, system configuration, and deployment conditions.

Scope and Limitations

Across its CES materials and technical documentation, NVIDIA has taken a measured approach in describing Rubin’s capabilities. The company publishes scenario-based comparisons that suggest improved training efficiency and lower inference cost per token relative to Blackwell-based systems, while emphasizing that these figures are projections rather than universal guarantees.

NVIDIA’s official product documentation and press releases do not include confirmed customer deployments, specific shipment dates, or independently verified benchmark results for Rubin-based systems. Rubin is presented as a platform direction, with NVL72 described as a reference implementation rather than a fully defined product lineup with fixed delivery milestones.

How NVIDIA Is Framing AI Infrastructure

Viewed through NVIDIA’s CES 2026 disclosures, Rubin and Vera Rubin NVL72 illustrate how the company is framing the next phase of AI infrastructure. NVIDIA emphasizes systems, racks, and data-center-level integration alongside individual processors.

Across its CES 2026 materials, NVIDIA associates this approach with challenges commonly discussed in large-scale AI deployments, including rising compute requirements, power availability, security considerations, and data-movement efficiency. Whether Rubin ultimately delivers on NVIDIA’s projections will depend on deployment experience and operational results. Rubin is presented as a tightly integrated platform designed to operate at scale, rather than as a collection of standalone components.